In the last exercise, you saw how to move data from Spark to pandas. However, maybe you want to go the other direction, and put a pandas DataFrame into a Spark cluster! The SparkSession class has a method for this as well.

The .createDataFrame() method takes a pandas DataFrame and returns a Spark DataFrame. The output of this method is stored locally, not in the SparkSession catalog. This means that you can use all the Spark DataFrame methods on it, but you can't access the data in other contexts. For example, a SQL query (using the .sql() method) that references your DataFrame will throw an error.

To access the data in this way, you have to save it as a temporary table. You can do this with the .createTempView() Spark DataFrame method, which takes as its only argument the name of the temporary table you'd like to register. This method registers the DataFrame as a table in the catalog, but as this table is temporary, it can only be accessed from the specific SparkSession used to create the Spark DataFrame.

There is also the .createOrReplaceTempView() method. This safely creates a new temporary table if nothing was there before, or replaces an existing table of the same name if one was already defined.

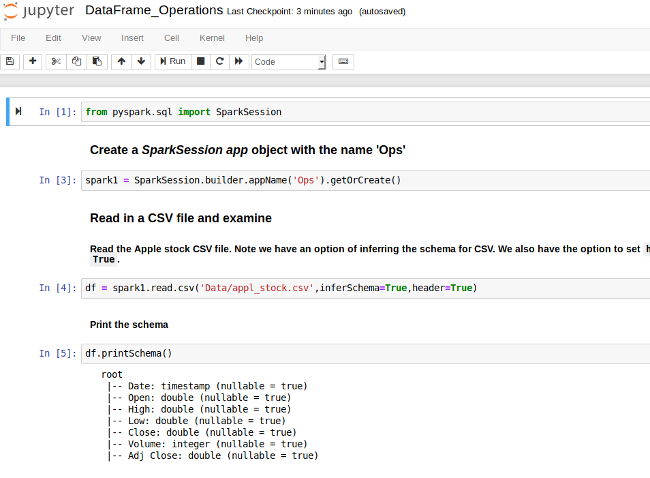

The RDD is a low-level object that lets Spark work its magic by splitting data across multiple nodes in the cluster. However, RDDs are hard to work with directly, so you'll be using the Spark DataFrame abstraction built on top of RDDs in the beginning.

The Spark DataFrame was designed to behave a lot like a SQL table (a table with variables in the columns and observations in the rows). Not only are they easier to understand, DataFrames are also more optimized for complicated operations than RDDs. When you start modifying and combining columns and rows of data, there are many ways to arrive at the same result, but some often take much longer than others. When using RDDs, it's up to the data scientist to figure out the right way to optimize the query, but the DataFrame implementation has much of this optimization built in!

To start working with Spark DataFrames, you first have to create a SparkSession object from your SparkContext. You can think of the SparkContext as your connection to the cluster and the SparkSession as your interface with that connection.

One of the advantages of the DataFrame interface is that you can run SQL queries on the tables in your Spark cluster. If you don't have any experience with SQL, don't worry, we'll provide you with queries!

As you saw in the last exercise, one of the tables in your cluster is the flights table. This table contains a row for every flight that left Portland International Airport (PDX) or Seattle-Tacoma International Airport (SEA). Running a query on this table is as easy as using the .sql() method on your SparkSession. This method takes a string containing the query and returns a DataFrame with the results!

If you look closely, you'll notice that the table flights is only mentioned in the query, not as an argument to any of the methods. This is because there isn't a local object in your environment that holds that data, so it wouldn't make sense to pass the table as an argument.

Remember, we've already created a SparkSession called spark in your workspace. (It's no longer called my_spark because we created it for you!)


PySpark, the Python API for Apache Spark, is one of the best-known big data tools and one of the fastest too. In this article, we will discuss the introductory part of PySpark and share a lot of learning inspired by DataCamp's course.

The first step in using Spark is connecting to a cluster. In practice, the cluster will be hosted on a remote machine that's connected to all other nodes. There will be one computer, called the master, that manages splitting up the data and the computations. The master is connected to the rest of the computers in the cluster, which are called workers. The master sends the workers data and calculations to run, and they send their results back to the master.

Creating a connection to Spark:

Creating the connection is as simple as creating an instance of the SparkContext class. The class constructor takes a few optional arguments that allow you to specify the attributes of the cluster you're connecting to. An object holding all these attributes can be created with the SparkConf() constructor. Take a look at the documentation for all the details!

For the rest of this article you'll have a SparkContext called sc. All code examples are taken from the simulated Spark cluster in DataCamp.

Spark's core data structure is the Resilient Distributed Dataset (RDD).
